ハルビン工業大学(深圳)• 2024 • 入門計量経済学 Homework & Lab • における解決策 • HITSZ 基础计量经济学作业 • 实验 2024

当サイト内のコンテンツの無断転載、引用、コピーは禁止されています。

For those titles or questions with at least one ‘+’ mark, it shows that the corresponding part is of the course numbered “ECON2010” as an extra part than “ECON2010F”, which is an easier alternative Introductory Econometrics course.

Homework 1

1 : [15 points : Theory]

Remind yourself of the terminology we developed in Chapter 1 for causal questions. Suppose we are interested in the causal effect of having health insurance on an individual’s health status.

(a) [2 points] We run a phone survey where we ask 5,000 respondents about their current insurance and health conditions. The data we collect is an example of a __________.

(b) [2 points] The US government has Census data on every elderly American’s current insurance and health status. This is an example of data for the __________.

(c) [2 points] Suppose we take our phone survey data and calculate the difference in health between individuals who do and do not have insurance. This difference is an example of an __________.

(d) [4 points] The difference in health between all Americans who do and don’t have insurance is an example of an __________. The effect of insurance on health is an example of a __________.

(e) [5 points] When the two objects in (d) coincide, we have an example of __________. Give one reason why the two objects in (d) might not coincide.

Solution to (a)

sample

Solution to (b)

population

Solution to (c)

estimate (or estimator)

Solution to (d)

estimand

(target) parameter

Solution to (e)

identification

We might expect richer individuals to be more likely to have health insurance and more likely to be healthy for other reasons. In this case the difference in health of Americans with/without health insurance is likely to overstate the causal effect of insurance (upward selection bias).

2 : [25 points : Theory]

Let $Y=a+X^{3}/b$ where $a$ and $b$ are some constants with $b>0$, and where $X\sim\mathrm{N}(0,1)$.

(a) [2 points] State the definition of the cumulative density function of $Y$, which we’ll call $F_{a,b}(y)$.

(b) [5 points] Express $F_{a,b}(y)$ in terms of the CDF of the standard normal distribution $\Phi(\cdot)$. Hint: can you re-write the inequality $Y\le y$ as an inequality involving $X$?

(c) [3 points] Express $E[Y]$ in terms of $E[X^{3}]$, then use the fact that $E[X^{3}]=0$ when $X\sim\mathrm{N}(0,1)$ to derive $E[Y]$.

(d) [4 points] Express $Cov(Y,X)$ in terms of $E[X^{4}]$, then use the fact that $E[X^{4}]=3$ when $X\sim\mathrm{N}(0,1)$ to derive $Cov(Y,X)$.

(e) [2 points] Suppose $E[Y]=0$ and $Cov(Y,X)=0.3$. What can you conclude about $a$ and $b$?

(f) [6 points] Given your answers to (b) and (e), what is the probability that a draw of $Y$ is bigger than zero? What is the probability that a draw of $Y$ falls between $-0.1$ and $0.1$?

(g) [3 points] Let $W=a+X^{3}/b+Z$ where $Z$ is mean-zero and independent of $X$. How does the distribution of $E[W\mid X]$ (recall this is a random variable) compare to the distribution of $Y$?

Solution to (a)

By definition, $F_{a,b}(y)=Pr(Y\le y)$.

Solution to (b)

We have\begin{align*} Y\le y & \iff a+X^{3}/b\le y\\ & \iff X\le\sqrt[3]{b(y-a)} \end{align*}using the facts that $b>0$ and that $f(x)=x^{3}$ is increasing. Thus $Pr(Y\le y)=Pr(X\le\sqrt[3]{b(y-a)})=\Phi(\sqrt[3]{b(y-a)})$.

Solution to (c)

$E[Y]=E[a+X^{3}/b]=a+E[X^{3}]/b$ by linearity of expectations. So with $E[X^{3}]=0$, $E[Y]=a$.

Solution to (d)

Since Formula $E[X]=0$,\begin{align*}Cov(Y,X) & =E[YX]\\ & =E[aX+X^{4}/b]\\ & =abE[X]+E[X^{4}]/b\end{align*}by linearity of expectations. With $E[X^{4}]=3$ and again $E[X]=0$, we thus have Formula $Cov(Y,X)=3/b$.

Solution to (e)

If $E[Y]=0$ we know from (c) that $a=0$. If further $Cov(Y,X)=0.3$ we know from (d) that $3/b=0.3$ or $b=10$.

Solution to (f)

Given (b), \begin{align*}Pr(Y>0) & =1-Pr(Y\le0)\\ & =1-\Phi(\sqrt[3]{b(0-a)}).\end{align*}Plugging $a=0$ into this expression yields \begin{align*}Pr(Y>0) & =1-\Phi(\sqrt[3]{b(0-0)})\\ & =1-\Phi(0)\\ & =0.5.\end{align*}Similarly, plugging in both $a=0$ and $b=10$,\begin{align*}Pr(-0.1\le Y\le0.1) & =Pr(Y\le0.1)-Pr(Y\le-0.1)\\ & =\Phi(\sqrt[3]{b(0.1-a)})-\Phi(\sqrt[3]{b(-0.1-a)})\\ & =\Phi(\sqrt[3]{10\times0.1})-\Phi(\sqrt[3]{10\times-0.1)})\\ & =\Phi(1)-\Phi(-1)\\ & \approx0.84-0.16\\ & =0.68.\end{align*}

Solution to (g)

We have \begin{align*}E[W\mid X] & =E[a+X^{3}/b+Z\mid X]\\ & =a+X^{3}/b+E[Z\mid X]\\ & =a+X^{3}/b\\ & =Y\end{align*}since $E[Z\mid X]=E[Z]=0$. Thus $E[W\mid X]$ and $Y$, being equal, have the same distribution.

3 : [25 points : Empirics]

Let’s prove your answer to 2(d) by simulation.

(a) [6 points] Create a Stata program that generates a dataset with $N=10,000$ independent draws of a standard normal variable $X_{i}\stackrel{iid}{\sim}\mathcal{\mathrm{N}}(0,1)$, generates $Y_{i}=a+X_{i}^{3}/b$ for the values of $a$ and $b$ you found in 2(e), and computes the sample covariance $\widehat{Cov}(X_{i},Y_{i})$. Run the program a few times. How does this exercise build confidence in your answer to 2(d)?

(b) [5 points] Run the same program once with $N=10$. Does the result shake your confidence in your answer to 2(d)? Explain.

(c) [8 points] Modify your program to automatically compute and store $500$ simulated values of $\widehat{Cov}(X_{i},Y_{i})$ with $N=10$ after fixing the seed to $1630$. Report the average simulated value. How does it compare to what you’d expect from your answer to 2(d)?

(d) [6 points] How does the mean and variance of the $500$ simulated $\widehat{Cov}(X_{i},Y_{i})$ change as you increase $N$ from $10$ to $100$? What do you expect to happen as you increase $N$ further?

Solution to (a)

set matsize 5000

set seed 12345

forval rep=1/5 {

clear

set obs 10000

gen X=rnormal()

gen Y=0+X^3/10

corr X Y, cov

}The output:

. set matsize 5000

. set seed 12345

. forval rep=1/5 {

2. clear

3. set obs 10000

4. gen X=rnormal()

5. gen Y=0+X^3/10

6. corr X Y, cov

7. }

number of observations (_N) was 0, now 10,000

(obs=10,000)

| X Y

-------------+------------------

X | .993742

Y | .300913 .153776

number of observations (_N) was 0, now 10,000

(obs=10,000)

| X Y

-------------+------------------

X | 1.01913

Y | .316717 .164776

number of observations (_N) was 0, now 10,000

(obs=10,000)

| X Y

-------------+------------------

X | 1.00079

Y | .298588 .146994

number of observations (_N) was 0, now 10,000

(obs=10,000)

| X Y

-------------+------------------

X | 1.00011

Y | .297844 .145352

number of observations (_N) was 0, now 10,000

(obs=10,000)

| X Y

-------------+------------------

X | 1.00243

Y | .301918 .152687After setting the seed to $12345$, $a=0$, and $b=0.3$, I ran my program five times and got sample covariances of $0.301$, $0.317$, $0.299$, $0.298$, and $0.302$. These are all somewhere around the $0.3$ I expected from the above.

Solution to (b)

set seed 12345

forval rep=1/1 {

clear

set obs 10

gen X=rnormal()

gen Y=0+X^3/10

corr X Y, cov

}The output:

. set seed 12345

. forval rep=1/1 {

2. clear

3. set obs 10

4. gen X=rnormal()

5. gen Y=0+X^3/10

6. corr X Y, cov

7. }

number of observations (_N) was 0, now 10

(obs=10)

| X Y

-------------+------------------

X | 1.06192

Y | .586814 .436831With the same seed and parameter values I get now a sample covariance of $0.587$, which is very different from $0.3$. But I’m not too worried about it, since this simulation uses a small sample. We expect by chance the sample covariance to be far from the “population” covariance.

Solution to (c)

set seed 1630

matrix results=J(500,1,.)

forval rep=1/500 {

clear

qui set obs 10

gen X=rnormal()

gen Y=0+X^3/10

qui corr X Y, cov

matrix results[`rep',1]=r(cov_12)

}

clear

svmat results

summThe output:

. set seed 1630

. matrix results=J(500,1,.)

. forval rep=1/500 {

2. clear

3. qui set obs 10

4. gen X=rnormal()

5. gen Y=0+X^3/10

6. qui corr X Y, cov

7. matrix results[`rep',1]=r(cov_12)

8. }

. clear

. svmat results

number of observations will be reset to 500

Press any key to continue, or Break to abort

number of observations (_N) was 0, now 500

. summ

Variable | Obs Mean Std. Dev. Min Max

-------------+---------------------------------------------------------

results1 | 500 .2975147 .2769293 .0058428 1.721521

I get an average sample covariance of $0.298$, which is again close to the expected $0.3$.

Solution to (d)

set seed 1630

matrix results=J(500,1,.)

forval rep=1/500 {

clear

qui set obs 100

gen X=rnormal()

gen Y=0+X^3/10

qui corr X Y, cov

matrix results[`rep',1]=r(cov_12)

}

clear

svmat results

summThe output:

. set seed 1630

. matrix results=J(500,1,.)

. forval rep=1/500 {

2. clear

3. qui set obs 100

4. gen X=rnormal()

5. gen Y=0+X^3/10

6. qui corr X Y, cov

7. matrix results[`rep',1]=r(cov_12)

8. }

. clear

. svmat results

number of observations will be reset to 500

Press any key to continue, or Break to abort

number of observations (_N) was 0, now 500

. summ

Variable | Obs Mean Std. Dev. Min Max

-------------+---------------------------------------------------------

results1 | 500 .3009726 .0977276 .0643365 .7326479In both cases I get an average sample covariance close to $0.3$ ( $0.298$ with $N=10$ and $0.301$ with $N=100$) but with the larger sample the simulated $\widehat{Cov}(X_{i},Y_{i})$ have a smaller standard deviation: of $0.098$ compared to $0.277$. I expect this standard deviation to decrease further as I increase $N$, because of the Law of Large Numbers.

4 : [35 points : Empirics]

Woodbury and Spiegelman (1987; available here) reports the results of two randomized experiments meant to encourage Unemployment Insurance (UI) recipients to return to work. In the Employer Experiment, an employer who employs a UI recipient for at least 4 months received a voucher worth \$500. In the Claimant Experiment (a.k.a. the Job-Search Incentive Experiment), any UI recipient finding employment for at least 4 months received \$500 directly.

(a) [4 points] Load the provided IlExp.dta dataset from this study into Stata. Use the $\texttt{describe}$ command to show a description of the variables in the dataset. Report a screenshot of the output.

(b) [7 points] Use the $\texttt{summarize}$ command to compute the means, standard deviations, etc of variables in the data. Report a screenshot of the output.

(c) [5 points] Based on your previous answer and the result of the $\texttt{count}$ command (which reports the total number of observations), which of the variables have missing data? Which variable has the most values missing, and what fraction of the total values is missing? Report a screenshot of the output used to answer these questions. How might missing data affect the interpretation of the results of the experiment?

(d) [8 points] Create a new “dummy” variable that indicates whether someone had any post-claim earnings. Compute summary stats including the mean and standard deviation separately by the three treatment arms, for the following variables: total benefits paid, age, pre-claim earnings, post-claim earnings, and the dummy variable for any post-claim earnings you just created. Report a screenshot of the output. Which treatment arm has the highest post-claim earnings? Which arm has the highest fraction of people with any post-claim earnings?

(e) [6 points] Write a few sentences about how economic reasoning might explain the differences in earnings described above across the treatment arms.

(f) [5 points] Submit clean and well-commented code used for this question.

Solution to (a)

use IlExp, clear

describeThe output:

. use IlExp, clear

. describe

Contains data from IlExp.dta

obs: 12,101

vars: 17 10 Jan 2014 17:52

size: 822,868

------------------------------------------------------------------------------------------------------------

storage display value

variable name type format label variable label

------------------------------------------------------------------------------------------------------------

age float %9.0g claimant age

benpdbye float %9.0g benefits paid, full benefit year

black float %9.0g claimant is black

control float %9.0g control group

exstbeny float %9.0g exhausted benefits (benefit year)

hie float %9.0g hiring incentive experiment group

hispanic float %9.0g claimant is hispanic

jsie float %9.0g job search incentive experiment group

male float %9.0g claimant is male

natvamer float %9.0g claimant is native american

otherace float %9.0g claimant is of other race

pospearn float %9.0g claimant post-claim earnings

prepearn float %9.0g claimant pre-claim earnings

white float %9.0g claimant is white

wkspdbye float %9.0g weeks of benefits, benefit year

treat float %9.0g

jsipart float %9.0g claimant participated in jsi (artificial data created in 2014)

------------------------------------------------------------------------------------------------------------

Sorted by:

Solution to (b)

summarizeThe output:

. summarize

Variable | Obs Mean Std. Dev. Min Max

-------------+---------------------------------------------------------

age | 12,101 33.00083 8.926023 20 54

benpdbye | 12,101 2698.75 2083.071 0 8151

black | 12,101 .2591521 .4381874 0 1

control | 12,101 .3265846 .4689832 0 1

exstbeny | 12,101 .4564912 .498124 0 1

-------------+---------------------------------------------------------

hie | 12,101 .3274936 .4693184 0 1

hispanic | 12,101 .0754483 .2641243 0 1

jsie | 12,101 .3459218 .4756875 0 1

male | 12,101 .5495414 .4975602 0 1

natvamer | 12,101 .0074374 .0859226 0 1

-------------+---------------------------------------------------------

otherace | 12,101 .0146269 .1200589 0 1

pospearn | 11,861 1749.021 2233.563 0 66466

prepearn | 11,862 3631.45 2709.897 0 55000

white | 12,101 .6433353 .4790344 0 1

wkspdbye | 12,101 19.54326 12.19206 0 48

-------------+---------------------------------------------------------

treat | 12,101 .6734154 .4689832 0 1

jsipart | 12,101 .2914635 .4544553 0 1Solution to (c)

From the count command or the screenshot in (a), we see that the total number of observations is $12,101$. So only the earnings variables ($\emph{pospearn}$ and $\emph{prepearn}$) have missing values. The post-earnings variable $\emph{pospearn}$ has the most missing: the data is non-missing in 11,861/12,101 of cases, so about 2% are missing. Missing values of earnings could be important because we care about earnings differences across treatment arms, but we may only have a selected sample of earnings. However, since only 2% of earnings are missing, we hope that this selection bias will be small.

Solution to (d)

gen anypostearnings=pospearn>0

replace anypostearnings=. if pospearn==.

summ benpdbye age prepearn pospearn anypostearnings if control == 1

summ benpdbye age prepearn pospearn anypostearnings if hie == 1

summ benpdbye age prepearn pospearn anypostearnings if jsie == 1The output:

. gen anypostearnings=pospearn>0

. replace anypostearnings=. if pospearn==.

(240 real changes made, 240 to missing)

. summ benpdbye age prepearn pospearn anypostearnings if control == 1

Variable | Obs Mean Std. Dev. Min Max

-------------+---------------------------------------------------------

benpdbye | 3,952 2785.891 2096.248 0 8073

age | 3,952 32.9795 8.8693 20 54

prepearn | 3,866 3640.385 2700.1 0 55000

pospearn | 3,866 1692.786 2036.887 0 15664

anypostear~s | 3,866 .7956544 .4032748 0 1

. summ benpdbye age prepearn pospearn anypostearnings if hie == 1

Variable | Obs Mean Std. Dev. Min Max

-------------+---------------------------------------------------------

benpdbye | 3,963 2724.943 2094.621 0 8151

age | 3,963 33.09866 9.052213 20 54

prepearn | 3,878 3622.949 2648.758 0 34462

pospearn | 3,878 1731.958 2113.525 0 23621

anypostear~s | 3,878 .7880351 .4087528 0 1

. summ benpdbye age prepearn pospearn anypostearnings if jsie == 1

Variable | Obs Mean Std. Dev. Min Max

-------------+---------------------------------------------------------

benpdbye | 4,186 2591.682 2055.308 0 8151

age | 4,186 32.92833 8.860157 20 54

prepearn | 4,118 3631.068 2775.832 0 50260

pospearn | 4,117 1817.899 2502.684 0 66466

anypostear~s | 4,117 .802769 .3979565 0 1Individuals in the job-search incentive group have the highest post-claim earnings and the highest rate of any post-period earnings. Differences in pre-claim earnings are much smaller across the groups than differences in post-claim earnings.

Solution to (e)

The job-search incentive treatment arm provided additional incentives for people to work, and so we might expect people to search harder under this treatment and thus have higher earnings. We might have also expected the employer-benefit incentive to make workers more desirable to hire and thus increase earnings as well. At least based on the means, this experiment does not appear to have been as effective, however. The fact that pre-claim earnings are similar across groups speaks to the success of the randomization protocol.

Solution to (f)

Homework1.do

* Part (a)

use IlExp, clear

desc

* Part (b)

summ

* Part (d)

gen anypostearnings=pospearn>0

replace anypostearnings=. if pospearn==.

summ benpdbye age prepearn pospearn anypostearnings if control == 1

summ benpdbye age prepearn pospearn anypostearnings if hie == 1

summ benpdbye age prepearn pospearn anypostearnings if jsie == 1Homework 2

1 : [30 points : Theory]

Suppose we are interested in whether workers are less productive on days when there is more air pollution. We are lucky enough to have identified a sample of days $i$ where pollution $X_{i}^{*}$ is plausibly as-good-as-randomly assigned with respect to latent worker productivity, and we think the linear model

\begin{align} Y_{i} & =\mu+\tau X_{i}^{*}+\epsilon_{i} \end{align} gives the causal effect $\tau$ on average worker productivity $Y_{i}$. Unfortunately, we do not measure pollution directly. Instead, we observe a noisy measure \begin{align} X_{i} & =X_{i}^{*}+\nu_{i} \end{align} We assume the ”measurement error” $\nu_{i}$ is idiosyncratic, in the sense of $Cov(\nu_{i},X_{i}^{*})=Cov(\nu_{i},\epsilon_{i})=0$, and that it is mean zero: $E[\nu_{i}]=0$.

(a) [5 points] Write down the formula for the slope coefficient from the bivariate population regression of $Y_{i}$ on $X_{i}^{*}$. Plug the model (1) into this formula, and simplify to show that this coefficient identifies $\tau$ if and only if $Cov(X_{i}^{*},\epsilon_{i})=0$ [this is how we’ll formalize ”as-good-as-random assignment” here].

(b) [9 points] Suppose $Cov(X_{i}^{*},\epsilon_{i})=0$. Write down the formula for the slope coefficient from the bivariate population regression of $Y_{i}$ on $X_{i}$. Plug the model (1) and the measurement equation (2) into this formula and simplify to show that as-good-as-random assignment is not enough to identify $\tau$ when the regressor is measured with error.

(c) [7 points] How does the sign of the slope coefficient in (b) compare to $\tau$? How do their magnitudes compare? If we were to reject the null hypothesis of an insignificant slope coefficient, could we feel confident that $\tau\neq0$?

(d) [9 points] Now suppose we fix our pollution measurement device so we record $X_{i}^{*}$ in our data without error. However, we discovered a bug in our code generating the average worker productivity measure. Rather than $Y_{i}$, we are actually only able to observe a noisy outcome $\tilde{Y}_{i}=Y_{i}+\eta_{i}$ where we again assume idiosyncratic noise, $E[\eta_{i}]=Cov(\eta_{i},X_{i}^{*})=Cov(\eta_{i},\epsilon_{i})=0$. Write down the formula for the slope coefficient from the bivariate population regression of $\tilde{Y}_{i}$ on $X_{i}^{*}$. Plug the model and the new measurement equation into this formula and simplify to show that the coefficient identifies $\tau$ when $X_{i}^{*}$ is as-good-as-randomly assigned. Show, in other words, that measurement error ”on the left” does not introduce bias (unlike measurement error ”on the right,” as you showed in (b)).

Solution to (a)

The slope coefficient is given by\begin{align*}\beta^{*} & =\frac{Cov(Y_{i},X_{i}^{*})}{Var(X_{i}^{*})}\\ & =\frac{Cov(\mu+\tau X_{i}^{*}+\epsilon_{i},X_{i}^{*})}{Var(X_{i}^{*})}\\ & =\frac{Cov(\mu,X_{i}^{*})+\tau Cov(X_{i}^{*},X_{i}^{*})+Cov(\epsilon_{i},X_{i}^{*})}{Var(X_{i}^{*})}\\ & =\tau+\frac{Cov(\epsilon_{i},X_{i}^{*})}{Var(X_{i}^{*})}\end{align*}where we plug the model in for the second equality, use linearity for the third equality, and use the facts that $Cov(\mu,X_{i}^{*})=0$ and $Cov(X_{i}^{*},X_{i}^{*})=Var(X_{i}^{*})$ for the fourth equality. This shows $\beta^{*}=\tau$ if and only if $Cov(\epsilon_{i},X_{i}^{*})=0$.

Solution to (b)

The slope coefficient is given by\begin{align*}\beta & =\frac{Cov(Y_{i},X_{i})}{Var(X_{i})}\\ & =\frac{Cov(\mu+\tau X_{i}^{*}+\epsilon_{i},X_{i}^{*}+\nu_{i})}{Var(X_{i}^{*}+\nu_{i})}\\ & =\frac{Cov(\mu,X_{i}^{*})+\tau Cov(X_{i}^{*},X_{i}^{*})+Cov(\epsilon_{i},X_{i}^{*})+Cov(\mu,\nu_{i})+\tau Cov(X_{i}^{*},\nu_{i})+Cov(\epsilon_{i},\nu_{i})}{Var(X_{i}^{*})+Var(\nu_{i})}\\ & =\tau\frac{Var(X_{i}^{*})}{Var(X_{i}^{*})+Var(\nu_{i})}\end{align*}where we plug both the model and the measurement equation in for the second equality, use linearity for the third equality, and use the given facts to arrive at the fourth equality. This shows $\beta\neq\tau$ generally; with $Var(X_{i}^{*})>0$ and $Var(\nu_{i})>0$ we have $\beta=\tau\kappa$ for $\kappa\in(0,1)$.

Solution to (c)

The above formula shows that $\beta$ and $\tau$ have the same sign, but that the former estimand is \emph onattenuated \emph defaultrelative to the latter parameter. That is, $|\beta|<|\tau|$. Thus if we can reject the null hypothesis of $\beta=0$ we can feel confident that $\tau\neq0$ as well, though we don’t know how much bigger it is (in absolute value) than $\beta$.

Solution to (d)

We now have \begin{align*}\tilde{\beta} & =\frac{Cov(\tilde{Y}_{i},X_{i}^{*})}{Var(X_{i}^{*})}\\ & =\frac{Cov(\mu+\tau X_{i}^{*}+\epsilon_{i}+\eta_{i},X_{i}^{*})}{Var(X_{i}^{*})}\\ & =\frac{Cov(\mu,X_{i}^{*})+\tau Cov(X^{*},X_{i}^{*})+Cov(\epsilon_{i},X_{i}^{*})+Cov(\eta_{i},X_{i}^{*})}{Var(X_{i}^{*})}\\ & =\tau\end{align*}So the causal parameter $\tau$ is indeed identified by the regression slope $\beta$ in this case.

2 : [25 points : Theory]

In class we showed that the slope coefficient $\widehat{\beta}$ in a bivariate OLS regression has the asymptotic distribution of:

\begin{align*}\sqrt{N}(\hat{\beta}-\beta) & \rightarrow_{d}\mathrm{N}(0,\sigma^{2})\end{align*}

where \begin{align}\sigma^{2} & =\dfrac{Var((X_{i}-E[X_{i}])\epsilon_{i})}{Var(X_{i})^{2}}\end{align} for $\epsilon_{i}=Y_{i}-(\alpha+X_{i}\beta)$ with $\alpha$ and $\beta$ being the coefficients in the population bivariate regression of $Y_{i}$on~$X_{i}$. This question will teach you about homoskedasticity and heteroskedasticity. By definition, $\epsilon_{i}$ is $\emph{homoskedastic}$ if $Var(\epsilon_{i}|X_{i}=x)=\omega^{2}$ for all $x$; that is, when the conditional variance of $\epsilon_{i}$ given $X_{i}$ doesn’t depend on $X_{i}$. Otherwise, $\epsilon_{i}$ is said to be $\emph{heteroskedastic}$.

(a) [6 points] Show that if $\epsilon_{i}$ is homoskedastic, then $Var(Y_{i}|X_{i}=x)$ doesn’t depend on $x$. [Hint: remember that $Var[a+Y]=Var[Y]$, and when we have conditional expectations/variances we can treat functions of $X_{i}$ like constants]

(b) [6 points] Say $Y_{i}$ is earnings and $X_{i}$ is an indicator for college attainment. In light of the fact that we showed in the previous question, what would homoskedasticity imply about the variance of earnings for college and non-college workers? Do you think this is likely to hold in practice?

(c) [9 points] Show that if $\epsilon_{i}$ is homoscedastic and $E[\epsilon_{i}|X_{i}]=0$ (as occurs when the CEF is linear), then $\sigma^{2}=\frac{\omega^{2}}{Var(X_{i})}$. [Hint: you may use the fact that $E[\epsilon_{i}]=E[X_{i}\epsilon_{i}]=0$, which we derived in class.]

(d) [4 points] Due to some unfortunate historical circumstances, the default regression command in Stata (and R) reports standard errors based on the assumption of homoskedasticity, following the formula you derived in part (c). There is essentially no good reason to use standard errors assuming homoskedasticity. If you type ”reg y x, robust”, then Stata gives you standard errors based on the formula (3); these are sometimes called heteroskedasticity-robust standard errors. You should always remember to type the ”, robust” option in Stata (this can be abbreviated to ”, r”)$^1$. Please write the sentence, ”I will not forget to use the ‘, r’ option for robust standard errors” five times. [This is not a trick question — I just really want you to remember this!]

- Even very smart people like Nate Silver forget to do this sometimes.

Solution to (a)

Recall that $\epsilon_{i}=Y_{i}-\alpha-X_{i}\beta$. Hence $Var(\epsilon_{i}\mid X_{i})=Var(Y_{i}-\alpha-X_{i}\beta\mid X_{i})=Var(Y_{i}\mid X_{i})$. This means if $Var(\epsilon_{i}\mid X_{i})$ doesn’t depend on $X_{i}$, neither does $Var(Y_{i}\mid X_{i})$.

Solution to (b)

Homoskedasticity would imply that the variance of earnings is the same for college-educated and non-college educated workers. This seems unlikely to hold in practice. For instance, the distribution of earnings for college earnings has a much longer right tail and likely has higher variance.

Solution to (c)

We showed in class that $E[\epsilon_{i}]=E[X_{i}\epsilon_{i}]=0$. This implies that $E[(X_{i}-E[X_{i}])\epsilon_{i}]=E[X_{i}\epsilon_{i}]-E[X_{i}]E[\epsilon_{i}]=0$. Hence, $Var((X_{i}-E[X_{i}])\epsilon_{i})=E[(X_{i}-E[X_{i}])^{2}\epsilon_{i}^{2}]$. We then see that \begin{align*} Var((X_{i}-E[X_{i}])\epsilon_{i}) & =E[(X_{i}-E[X_{i}])^{2}\epsilon_{i}^{2}]\\ & =E[E[(X_{i}-E[X_{i}])^{2}\epsilon_{i}^{2}|X_{i}]]\text{ (Law of iterated expectation) }\\ & =E[(X_{i}-E[X_{i}])^{2}E[\epsilon_{i}^{2}|X_{i}]]\\ & =E[(X_{i}-E[X_{i}])^{2}Var[\epsilon_{i}|X_{i}]]\text{ (Since }Var[\epsilon_{i}|X_{i}]=E[\epsilon_{i}^{2}|X_{i}]-E[\epsilon_{i}|X_{i}]^{2}=E[\epsilon_{i}^{2}|X_{i}]\text{) }\\ & =E[(X_{i}-E[X_{i}])^{2}]\omega^{2}\text{ (Since }Var[\epsilon_{i}|X_{i}]=\omega^{2}\text{ by assumption )}\\ & =Var(X_{i})\omega^{2}\end{align*}

Plugging into the formula for $\sigma^{2}$, we obtain $\omega^{2}/Var(X_{i})$ as desired.

Solution to (d)

I will not forget to use the `, r’ option for robust standard errors.

I will not forget to use the `, r’ option for robust standard errors.

I will not forget to use the `, r’ option for robust standard errors.

I will not forget to use the `, r’ option for robust standard errors.

I will not forget to use the `, r’ option for robust standard errors.

3 : [45 points : Empirics]

Let’s once again use the Woodbury and Spiegelman (1987) data, now with some regression.

(a) [7 points] Restrict your analysis to the job-search incentive and the control group. Regress postclaim earnings on a constant and an indicator for being in the job-search incentive group (don’t forget your answer to 2(d) above!). Report a screenshot of your results.

(b) [5 points] How does the intercept estimate from your regression in part (a) compare to your estimate of the control group mean from the previous problem set? What about its confidence interval?

(c) [5 points] How does the estimated coefficient on being in the job-search group from your regression in part (a) compare to your estimate of the treatment effect from the previous problem set (i.e. the difference in post earnings across treatment and control groups)? What about its confidence interval?

(d) [7 points] Re-run the regression in part (a) but without using the ‘, robust’ option (never do this again!). Report a screenshot of your results. Discuss any changes in coefficients and standard errors.

(e) [7 points] Re-run the regression in part (a) but with the ”black” indicator included as a control. Report a screenshot of your results. Explain intuitively why it makes sense that the slope coefficient doesn’t really change with this control [hint: remember we are analyzing an experiment].

(f) [9 points] Re-run the regression in part (e) but including an interaction} variable which multiplies the ”black” indicator with the job-search incentive treatment indicator. Report a screenshot of your results. What is the regression estimate of the treatment effect for non-black individuals? What is the regression estimate of the treatment effect for black individuals? Is the difference in estimated effects statistically significant?

(g) [5 points] Submit clean and well-commented code used for this question.

Solution to (a)

use IlExp.dta, clear

gen touse = inlist(1, control, jsie)

reg pospearn jsie if touse, r The output:

. use IlExp.dta, clear

. gen touse = inlist(1, control, jsie)

. reg pospearn jsie if touse, r

Linear regression Number of obs = 7,983

F(1, 7981) = 6.03

Prob > F = 0.0141

R-squared = 0.0007

Root MSE = 2289

------------------------------------------------------------------------------

| Robust

pospearn | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

jsie | 125.1129 50.93661 2.46 0.014 25.26381 224.9619

_cons | 1692.786 32.75927 51.67 0.000 1628.569 1757.003

------------------------------------------------------------------------------Solution to (b)

The intercept coincides perfectly with the estimated mean of the control group. Standard errors (and hence confidence intervals) are almost identical.

Solution to (c)

Again, the estimated coefficient coincides exactly with the treatment effect estimated in PS2. Standard errors (and hence confidence intervals) are almost identical.

Solution to (d)

reg pospearn jsie if touseThe output:

. reg pospearn jsie if touse

Source | SS df MS Number of obs = 7,983

-------------+---------------------------------- F(1, 7981) = 5.96

Model | 31209051.7 1 31209051.7 Prob > F = 0.0147

Residual | 4.1816e+10 7,981 5239417.04 R-squared = 0.0007

-------------+---------------------------------- Adj R-squared = 0.0006

Total | 4.1847e+10 7,982 5242670.57 Root MSE = 2289

------------------------------------------------------------------------------

pospearn | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

jsie | 125.1129 51.2629 2.44 0.015 24.62419 225.6016

_cons | 1692.786 36.81379 45.98 0.000 1620.621 1764.951

------------------------------------------------------------------------------The coefficients are identical, as expected, but now the standard errors are different (they are no longer robust but instead calculated by the homoskedastic formula above). Somewhat surprisingly here, the homoskedastic standard errors are a bit larger than the heteroskedastic ones (we usually expect the opposite).

Solution to (e)

reg pospearn jsie black if touse, rThe output:

. reg pospearn jsie black if touse, r

Linear regression Number of obs = 7,983

F(2, 7980) = 54.95

Prob > F = 0.0000

R-squared = 0.0103

Root MSE = 2278.2

------------------------------------------------------------------------------

| Robust

pospearn | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

jsie | 115.156 50.59379 2.28 0.023 15.97893 214.333

black | -511.5598 49.17525 -10.40 0.000 -607.9561 -415.1634

_cons | 1829.608 36.83119 49.68 0.000 1757.409 1901.807

------------------------------------------------------------------------------

The randomized treatment variable should be uncorrelated with all predetermined characteristics of individuals (just as we expect it to be uncorrelated with potential outcomes). Thus none of these characteristics are a source of bias, since adding them to the simple treatment regression has no effect on the estimated coefficient.

Solution to (f)

gen jsie_black=jsie*black

reg pospearn jsie jsie_black black if touse, rThe output:

. gen jsie_black=jsie*black

. reg pospearn jsie jsie_black black if touse, r

Linear regression Number of obs = 7,983

F(3, 7979) = 37.18

Prob > F = 0.0000

R-squared = 0.0103

Root MSE = 2278.3

------------------------------------------------------------------------------

| Robust

pospearn | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

jsie | 123.2174 62.92546 1.96 0.050 -.1329608 246.5677

jsie_black | -31.27074 98.28245 -0.32 0.750 -223.93 161.3885

black | -495.8183 65.71593 -7.54 0.000 -624.6387 -366.9979

_cons | 1825.398 40.2122 45.39 0.000 1746.571 1904.224

------------------------------------------------------------------------------The regression estimate of the treatment effect for non-black individuals is given by the treatment main effect (at 123.2) since this approximates the effect of the treatment on the outcome when the black indicator is zero. The regression estimate of the treatment effect for black individuals is given by the sum of this main effect and the interaction effect (so 91.9=123.2-31.3) since this approximates the effect of the treatment on the outcome when the black indicator is one. The interaction effect thus gives the difference in estimated effects. With a p-value of 0.75, it is far from statistically significant.

Solution to (g)

Homework2.do

* Part (a)

use IlExp.dta, clear

gen touse = inlist(1, control, jsie)

reg pospearn jsie if touse, r

* Part (d)

reg pospearn jsie if touse

* Part (e)

reg pospearn jsie black if touse, r

* Part (f)

gen jsie_black=jsie*black

reg pospearn jsie jsie_black black if touse, rHomework 3

1 : [32 points : Theory]

You observe an $\emph{iid }$sample of data $(Y_{i},L_{i},K_{i})$ across a set of manufacturing firms $i$. Here $Y_{i}$ denotes the output (e.g. total sales) of the firm in some period, $L_{i}$ measures the labor input (e.g. total wage bill) of the firm in this period, and $K_{i}$ measures the capital input (e.g. total value of machines and other assets) of the firm in this period. We are interested in estimating a $\emph{production function}$: i.e. the structural relationship $\emph{determining}$ a firm’s ability to produce output given a set of inputs.

(a) [6 points] Suppose you estimate a regression of $\ln Y_{i}$ on $\ln L_{i}$ and $\ln K_{i}$ (and a constant), where $\ln$ denotes the natural log. Explain how you would interpret the estimated coefficients on $\ln L_{i}$ and $\ln K_{i}$, without making any assumptions on the structural relationship.

(b) [8 points] Now suppose you assume a Cobb-Douglas production function: $Y_{i}=Q_{i}L_{i}^{\alpha}K_{i}^{\beta}$ for some parameters $(\alpha,\beta)$, where $Q_{i}$ denotes the (unobserved) productivity of firm $i$. Suppose we assume productivity shocks are as-good-as-random across firms: i.e. that $Q_{i}$ is independent of $(L_{i},K_{i})$. Show that under this assumption the regression estimated in (a) identifies $\alpha$ and $\beta$.

(c) [8 points] Suppose we further assume constant returns-to-scale: $\alpha+\beta=1$. Show that a bivariate regression of $\ln(Y_{i}/L_{i})$ on $\ln(K_{i}/L_{i})$ (and a constant) identifies the production function parameters, maintaining the independence assumption in (b). How could we test the constant-returns-to-scale assumption here?

(d) [10 points] Let’s now weaken the as-good-as-random assignment assumption in (b). Suppose we model $Q_{i}=S_{i}^{\theta}\epsilon_{i}$ where $S_{i}$ denotes the observed size of firm $i$, $\theta$ is a parameter governing the relationship between firm size and productivity, and $\epsilon_{i}$ is a productivity shock that is independent of $(S_{i},L_{i},K_{i})$. Specify a regression which identifies $\beta$ and $\theta$ under this assumption, maintaining the assumption of $\alpha+\beta=1$. Do you expect the regression estimated in (c) to overstate or understate $\beta$, given the new model?

Solution to (a)

The regression \begin{align*}\ln Y_{i} & =\gamma_{0}+\gamma_{1}\ln L_{i}+\gamma_{2}\ln K_{i}+U_{i}\end{align*}gives a linear approximation of the CEF $E[\ln Y_{i}\mid\ln L_{i},\ln K_{i}]$ absent any assumptions on the structural production function. We can interpret $\gamma_{1}$ as the approximate partial derivative of this CEF with respect to $\ln L_{i}$ and $\gamma_{2}$ as the approximate partial derivative with respect to $\ln K_{i}$. As discussed in class, these parameters have the interpretation of an elasticity: $\gamma_{1}$ approximates the percentage change in output per percentage increase in labor across firms (holding capital fixed), while $\gamma_{2}$ approximates the percentage change in output per percentage increase in capital across firms (holding labor fixed).

Solution to (b)

Under the Cobb-Douglas model, \begin{align*}\ln Y_{i} & =\ln(Q_{i}L_{i}^{\alpha}K_{i}^{\beta})\\ & =\ln Q_{i}+\alpha\ln L_{i}+\beta\ln K_{i}.\end{align*}If $Q_{i}$ is independent of $(L_{i},K_{i})$, then $\ln Q_{i}$ is independent of $\ln L_{i}$ and $\ln K_{i}$. In particular, the conditional expectation \begin{align*}E[\ln Y_{i}\mid\ln L_{i},\ln K_{i}] & =E[\ln Q_{i}\mid\ln L_{i},\ln K_{i}]+\alpha\ln L_{i}+\beta\ln K_{i}\\ & =E[\ln Q_{i}]+\alpha\ln L_{i}+\beta\ln K_{i}\end{align*}is linear in $\ln L_{i}$ and $\ln K_{i}$. This means that the regression in (a) identifies $\alpha$ and $\beta$ as the coefficients of this regression under this model and assumption.

Solution to (c)

If we assume $\alpha+\beta=1$ then $\alpha=1-\beta$ and our model becomes \begin{align*}\ln Y_{i} & =\ln Q_{i}+(1-\beta)\ln L_{i}+\beta\ln K_{i}\\ & =\ln Q_{i}+\ln L_{i}+\beta(\ln K_{i}-\ln L_{i})\end{align*}Since $\ln(Y_{i}/L_{i})=\ln Y_{i}-\ln L_{i}$, this means \begin{align*}E[\ln(Y_{i}/L_{i})\mid\ln L_{i},\ln K_{i}] & =E[\ln Y_{i}\mid\ln L_{i},\ln K_{i}]-\ln L_{i}\\ & =E[\ln Q_{i}]+\beta(\ln K_{i}-\ln L_{i}).\end{align*}So, as before, the conditional expectation $E[\ln(Y_{i}/L_{i})\mid\ln L_{i},\ln K_{i}]$ is linear in $\ln K_{i}-\ln L_{i}=\ln(K_{i}/L_{i})$. This means the slope coefficient in a bivariate regression of $\ln(Y_{i}/L_{i})$ on $\ln(K_{i}/L_{i})$ identifies $\beta$, and since we know $\alpha=1-\beta$ this parameter is also identified. To test constant returns-to-scale we could regress $\ln Y_{i}$ on $\ln L_{i}$ and $\ln K_{i}$ and use the lincom command in stata to check whether the sum of their coefficients is one.

Solution to (d)

The model is now $Y_{i}=L_{i}^{1-\beta}K_{i}^{\beta}S_{i}^{\theta}\epsilon_{i}$, implying \begin{align*}\ln Y_{i} & =\ln(L_{i}^{1-\beta}K_{i}^{\beta}S_{i}^{\theta}\epsilon_{i})\\ & =(1-\beta)\ln L_{i}+\beta\ln K_{i}+\theta\ln S_{i}+\ln\epsilon_{i}\\\ln Y_{i}-\ln L_{i} & =\beta(\ln K_{i}-\ln L_{i})+\theta\ln S_{i}+\ln\epsilon_{i}\\\ln(Y_{i}/L_{i}) & =\beta\ln(K_{i}/L_{i})+\theta\ln S_{i}+\ln\epsilon_{i}\end{align*}Similar to before, we have \begin{align*}E[\ln(Y_{i}/L_{i})\mid\ln L_{i},\ln K_{i},\ln S_{i}] & =\beta\left(\ln(K_{i}/L_{i})\right)+\theta\ln S_{i}+E[\ln\epsilon_{i}\mid\ln L_{i},\ln K_{i},\ln S_{i}]\\ & =E[\ln\epsilon_{i}]+\beta\ln(K_{i}/L_{i})+\theta\ln S_{i}\end{align*}using the independence of $\epsilon_{i}$ from $(S_{i},L_{i},K_{i})$, which implies the independence of $\ln\epsilon_{i}$ from $(\ln S_{i},\ln L_{i},\ln K_{i})$. This means that a regression of log output/labor on log capital/labor and log firm size identifies the production function parameters $(\beta,\theta)$. The regression model which omits log firm size will generally be \begin_inset Quotes eldbiased\begin_inset Quotes erd (in the sense of an identification failure, not the statistical sense). Specifically, it will identify \begin{align*}\frac{Cov\left(\ln(Y_{i}/L_{i}),\ln(K_{i}/L_{i})\right)}{Var\left(\ln(K_{i}/L_{i})\right)} & =\frac{Cov\left(\beta\ln(K_{i}/L_{i})+\theta\ln S_{i}+\ln\epsilon_{i},\ln(K_{i}/L_{i})\right)}{Var\left(\ln(K_{i}/L_{i})\right)}\\ & =\beta+\theta\frac{Cov\left(\ln S_{i},\ln(K_{i}/L_{i})\right)}{Var\left(\ln(K_{i}/L_{i})\right)}\end{align*}I would expect $\theta>0$, i.e. for larger firms to be more productive holding capital and labor fixed. I have less of a solid sense of the sign of $Cov\left(\ln S_{i},\ln(K_{i}/L_{i})\right)$, but one might imagine that more capital-intensive firms are larger because they have more ability to pay the fixed costs to invest in things like fancy machinery or buildings. In this case $Cov\left(\ln S_{i},\ln(K_{i}/L_{i})\right)>0$ and so the regression in (c) will generally overstate $\beta$. If you told a story for why $Cov\left(\ln S_{i},\ln(K_{i}/L_{i})\right)<0$ then you might conclude that there is a downward bias in (c).

2 : [32 points : Theory]

Suppose we are interested in estimating the (potentially different) employment effects of minimum wage increases for high school dropouts and high school graduates. As in Card and Krueger (1994), we observe employment outcomes for a sample of individuals of both educational groups in New Jersey and Pennslyvania, before and after the New Jersey minimum wage increase. Let $Y_{it}$ denote the employment status of individual $i$ at time $t$, let $D_{i}\in\{0,1\}$ indicate an individual’s residence in New Jersey (asuming nobody moves between the two time periods), and let $Post_{t}\in\{0,1\}$ indicate the latter time period. Furthermore let $Grad_{i}\in\{0,1\}$ indicate high school graduation. Consider the regression of \begin{align}Y_{it}= & \mu+\alpha D_{i}+\tau Post_{t}+\gamma Grad_{i}+\beta D_{i}Post_{t}\\ & +\lambda Post_{t}Grad_{i}+\psi D_{i}Grad_{i}+\pi D_{i}Post_{t}Grad_{i}+\upsilon_{it}.\nonumber \end{align}

Note in that this regression includes all ‘‘main effects” ($D_{i}$, $Post_{t}$, and $Grad_{i}$), all two-way interactions ($D_{i}Post_{t}$, $Post_{t}Grad_{i}$, and $D_{i}Grad_{i}$) as well as the three-way interaction $D_{i}Post_{t}Grad_{i}$.

(a) [7 Points] Suppose we regress $Y_{it}$ on $D_{i}$, $Post_{t}$, and $D_{i}Post_{t}$ in the sub-sample of high school dropouts (with $Grad_{i}=0$). Derive the coefficients for this sub-sample regression in terms of the coefficients in the full-sample regression (1). Repeat this exercise for the saturated regression of $Y_{it}$ on $D_{i}$, $Post_{t}$, and $D_{i}Post_{t}$ in the sub-sample of high school graduates (with $Grad_{i}=1$): what do the coefficients for this sub-sample regression equal, in terms of the coefficients in (4)?

(b) [8 Points] Extending what we saw in lecture, state assumptions under which these two sub-sample regressions (in the $Grad_{i}=0$ and $Grad_{i}=1$ subsamples) identify the causal effects of minimum wage increases on employment for high school dropouts and graduates, respectively. Prove your claims.

(c) [7 Points] Under the assumptions in (b), which coefficient in (4) yields a test for whether the minimum wage effects for high school dropouts and graduates differ? Use your answers in (a).

(d) [10 Points] Suppose New Jersey and Pennslyvania were on different employment trends when the minimum wage was increased, such that your assumptions in (b) fail. However, suppose the $\emph{difference}$ in employment trends across states is the $\emph{same}$ for high school dropouts and graduates. Show that under this weaker assumption the coefficient from (c) still identifies the difference in minimum wage effects across the groups.

Solution to (a)

In the $Grad_{i}=0$ sub-sample, we obtain \begin{align*}Y_{it} & =\mu+\alpha D_{i}+\tau Post_{t}+\beta D_{i}Post_{t}+u_{it},\end{align*}since the coefficients from these terms in (1) fit the elements of $E[Y_{it}\mid D_{i},Post_{t},Grad_{t}=0]$. In the $Grad_{i}=1$ sub-sample, we obtain \begin{align*}Y_{it} & =(\gamma+\mu)+(\alpha+\psi)D_{i}+(\tau+\lambda)Post_{t}+(\beta+\pi)D_{i}Post_{t}+v_{it},\end{align*}by the same logic.

Solution to (b)

Suppose, for each $g\in\{0,1\}$, \begin{align*}E[Y_{i2}(0)-Y_{i1}(0)\mid D_{i}=1,Grad_{i}=g] & =E[Y_{i2}(0)-Y_{i1}(0)\mid D_{i}=0,Grad_{i}=g],\end{align*}where we use the potential outcomes notation from class. Under these parallel trends assumptions we have \begin{align*}\beta & =E[Y_{i2}-Y_{i1}\mid D_{i}=1,Grad_{i}=0]-E[Y_{i2}-Y_{i1}\mid D_{i}=0,Grad_{i}=0]\\ & =E[Y_{i2}(1)-Y_{i1}(0)\mid D_{i}=1,Grad_{i}=0]-E[Y_{i2}(0)-Y_{i1}(0)\mid D_{i}=0,Grad_{i}=0]\\ & =E[Y_{i2}(1)-Y_{i1}(0)\mid D_{i}=1,Grad_{i}=0]-E[Y_{i2}(0)-Y_{i1}(0)\mid D_{i}=1,Grad_{i}=0]\\ & =E[Y_{i2}(1)-Y_{i2}(0)\mid D_{i}=1,Grad_{i}=0],\end{align*}following the proof in the lecture slides. Similarly, \begin{align*}\beta+\pi & =E[Y_{i2}-Y_{i1}\mid D_{i}=1,Grad_{i}=1]-E[Y_{i2}-Y_{i1}\mid D_{i}=0,Grad_{i}=1]\\ & =E[Y_{i2}(1)-Y_{i2}(0)\mid D_{i}=1,Grad_{i}=1].\end{align*}

Solution to (c)

The difference we wish to test is \begin{align*} & E[Y_{i2}(1)-Y_{i2}(0)\mid D_{i}=1,Grad_{i}=1]-E[Y_{i2}(1)-Y_{i2}(0)\mid D_{i}=1,Grad_{i}=0]\\ & =(\beta+\pi)-\beta\\ & =\pi\end{align*}So we could test whether the coefficient on $D_{i}Grad_{i}Post_{t}$ in (1) is zero.

Solution to (d)

The “difference-in-difference-in-differences” (sometimes called “triple-diff”) regression coefficient gives \begin{align*}\pi\text{=} & E[Y_{i2}-Y_{i1}\mid D_{i}=1,Grad_{i}=1]-E[Y_{i2}-Y_{i1}\mid D_{i}=0,Grad_{i}=1]\\ & -\left(E[Y_{i2}-Y_{i1}\mid D_{i}=1,Grad_{i}=0]-E[Y_{i2}-Y_{i1}\mid D_{i}=0,Grad_{i}=0]\right)\\= & E[Y_{i2}(1)-Y_{i1}(0)\mid D_{i}=1,Grad_{i}=1]-E[Y_{i2}(0)-Y_{i1}(0)\mid D_{i}=0,Grad_{i}=1]\\ & -\left(E[Y_{i2}(1)-Y_{i1}(0)\mid D_{i}=1,Grad_{i}=0]-E[Y_{i2}(0)-Y_{i1}(0)\mid D_{i}=0,Grad_{i}=0]\right)\\= & \underbrace{E[Y_{i2}(1)-Y_{i2}(0)\mid D_{i}=1,Grad_{i}=1]-E[Y_{i2}(1)-Y_{i2}(0)\mid D_{i}=1,Grad_{i}=0]}_{\text{Parameter of interest}}\\ & +\underbrace{E[Y_{i2}(0)-Y_{i1}(0)\mid D_{i}=1,Grad_{i}=1]-E[Y_{i2}(0)-Y_{i1}(0)\mid D_{i}=0,Grad_{i}=1]}_{\text{Difference in trends for }{Grad_{i}=1}}\\ & -\left(\underbrace{E[Y_{i2}(0)-Y_{i1}(0)\mid D_{i}=1,Grad_{i}=0]-E[Y_{i2}(0)-Y_{i1}(0)\mid D_{i}=0,Grad_{i}=0]}_{\text{Difference in trends for }Grad_{i}=0}\right),\end{align*}where the first equality uses the potential outcomes model and the second equality uses linearity of expectations and rearranges terms. The weaker assumption is that the two differences in trends are equal to each other (though not necessarily each zero). When this holds they cancel, and we are left with the parameter of interest.

3 : [36 points : Empirics]

In this problem, you will look at how Medicaid expansions impact insurance coverage using publicly-available data that is similar to the (confidential) data used in Carey et al. (2020), which we discussed in class. The attached dataset $\emph{ehec\_data.dta}$ contains state-level panel data that shows the fraction of low-income childless adults who have health insurance in each year. Start by loading this data into Stata.

(a) [4 points] Let’s first get a feel for the data. When you open a dataset, it’s good to use the $\texttt{browse}$ command, which shows you the raw data. This helps you see how the data is structured.

Run the command and report a screenshot of your results. Next, use the $\texttt{tab}$ command to tabulate the year variable. Report a screenshot of your results. For what years is data available?

(b) [4 points] The variable $\texttt{yexp2}$ shows the first year that a state expanded Medicaid under the Affordable Care Act, and is missing if a state never expanded Medicaid. Use the $\texttt{tab}$ command to figure out how many states in the data first expanded in each year, and report a screenshot of your result. How many states (in the data) first expanded in 2014? How many never expanded? Are all 50 states contained in the data? [Hint: you can use the ‘‘, missing” option to tabulate missing values. Since you have panel data, each state will appear multiple times in the data, so you will want to only tabulate for a fixed year (e.g. add ‘‘if year == 2009” option) so that each state only shows up once in your tabulations.]

(c) [5 points] As in Carey et al, we will focus on the first two years of Medicaid expansion, 2014 and 2015. To simplify matters, drop the 3 states who first expanded in 2015 for the remainder of the analysis (since these states are partially treated during the time we’re studying). Create a variable $\texttt{treatment}$ that is equal to 1 if a state expanded in 2014 and equal to 0 if a state never expanded or expanded after 2015. Tabulate your treatment variable (for a fixed year, as above) and make sure the number of treated and control states matches what you’d expect from your previous answers. Report a screenshot of your tabulate command.

(d) [6 points] Using observations from 2013 and 2014 $\textit{only}$, estimate the regression specification

\[Y_{it}=\beta_{0}+1[t=2014]\times\beta_{1}+treatment_{i}\times\beta_{2}+treatment_{i}\times1[t=2014]\times\beta_{3}+\epsilon_{it}\]

where $Y_{it}$ denotes the insurance coverage rate of state $i$ in year $t$. Cluster your standard errors by state using the ‘‘, cluster(stfips)” option (instead of the usual ‘‘, r”). What is your difference-in-differences estimate of the effect of Medicaid expansion on coverage? Is it significant?

(e) [7 points] One way to assess the plausibility of the key parallel trends assumption in difference-in-differences settings is to create an ‘‘event-study plot” that allows us to assess pre-treatment differences in trends. That is, we compare the trends for the two groups both before and after the treatment occurred. To do this, create the variable $\texttt{t2008}=\texttt{treatment}\times1[t=2008]$. Create analogous variables $\texttt{t2009},…,\texttt{t2019}$. Set $\texttt{t2013}$ to 0 for all observations [Note: this normalizes the coefficient on $\texttt{t2013}$, to 0. This is the same as omitting this variable from the regression, except including the zero variable in the regression in Stata makes it easier to plot the coefficients.] Regress $\texttt{dins}$ on fixed effects for year, fixed effects for state, and the variables $\texttt{t2008},…,\texttt{t2019}$ you just created. That is, use OLS to estimate the regression

\[Y_{it}=\phi_{i}+\lambda_{t}+\sum_{s\neq2013}1[t=s]\times treatment_{i}\times\beta_{s}+\epsilon_{is}\]

[Note: you can specify fixed effects in a regression specification by writing ‘‘i.stfips” for state fixed effects and ‘‘i.year” for year fixed effects.] Again, remember to cluster your standard errors at the state level. Install the $\texttt{coefplot}$ package by running ‘‘ssc install coefplot”. Then, run the command ‘‘coefplot, omitted keep(t2{*}) vertical” to create an event-study plot. Report a screenshot of both your regression results and the plot.

(f) [5 points] Use the $\texttt{test}$ command to test the joint null hypothesis that all of the pre-treament event-study coefficients, $\beta_{2008},…,\beta_{2012}$ are equal to zero. [Hint: the command ‘‘test x1 x2” runs an F-test for the joint hypothesis that the coefficients on x1 and x2 are both zero.] What is the $p$-value from this joint $F$-test? Does this increase your confidence in the parallel trends assumption?

(g) [5 points] Submit clean and well-commented code used for this question.

Solution to (a)

use ehec_data.dta, clear

browse

tab yearThe output:

. use ehec_data.dta, clear

. br

. tab year

Census/ACS |

survey year | Freq. Percent Cum.

------------+-----------------------------------

2008 | 46 8.33 8.33

2009 | 46 8.33 16.67

2010 | 46 8.33 25.00

2011 | 46 8.33 33.33

2012 | 46 8.33 41.67

2013 | 46 8.33 50.00

2014 | 46 8.33 58.33

2015 | 46 8.33 66.67

2016 | 46 8.33 75.00

2017 | 46 8.33 83.33

2018 | 46 8.33 91.67

2019 | 46 8.33 100.00

------------+-----------------------------------

Total | 552 100.00Data is available for all years from 2008 to 2019.

Solution to (b)

tab yexp2 if year == 2009, mThe output:

. tab yexp2 if year == 2009, m

Year of |

Medicaid |

Expansion | Freq. Percent Cum.

------------+-----------------------------------

2014 | 22 47.83 47.83

2015 | 3 6.52 54.35

2016 | 2 4.35 58.70

2017 | 1 2.17 60.87

2019 | 2 4.35 65.22

. | 16 34.78 100.00

------------+-----------------------------------

Total | 46 100.00We only have data for 46 states. Of these, 22 expanded in 2014, 8 expanded at some point in time after 2014, and 16 never expanded.

Solution to (c)

gen treatment = .

replace treatment = 1 if yexp2 == 2014

replace treatment = 0 if yexp2 >= 2016

drop if treatment == .

tab treatment if year==2008, mThe output:

. gen treatment = .

(552 missing values generated)

. replace treatment = 1 if yexp2 == 2014

(264 real changes made)

. replace treatment = 0 if yexp2 >= 2016

(252 real changes made)

. drop if treatment == .

(36 observations deleted)

. tab treatment if year==2008, m

treatment | Freq. Percent Cum.

------------+-----------------------------------

0 | 21 48.84 48.84

1 | 22 51.16 100.00

------------+-----------------------------------

Total | 43 100.0022 states expanded Medicare in 2014, while 21 expanded it in 2016 or later, or never. This coincides with what we would expect looking to the previous table.

Solution to (d)

gen y2014 = (year == 2014)

gen t_y2014 = y2014 * treatment

reg dins treatment y2014 t_y2014 if year == 2013 | year == 2014, cluster(stfips)The output:

. gen y2014 = (year == 2014)

. gen t_y2014 = y2014 * treatment

. reg dins treatment y2014 t_y2014 if year == 2013 | year == 2014, cluster(stfips)

Linear regression Number of obs = 86

F(3, 42) = 96.65

Prob > F = 0.0000

R-squared = 0.4586

Root MSE = .05336

(Std. Err. adjusted for 43 clusters in stfips)

------------------------------------------------------------------------------

| Robust

dins | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

treatment | .0396753 .0159493 2.49 0.017 .0074883 .0718622

y2014 | .0448456 .0060665 7.39 0.000 .0326029 .0570883

t_y2014 | .0464469 .0091256 5.09 0.000 .0280306 .0648631

_cons | .6227468 .009852 63.21 0.000 .6028648 .6426289

------------------------------------------------------------------------------I estimate a treatment effect of $\hat{\beta}_{3}\approx0.046$ with a clustered standard error of $0.009$; so it’s highly statistically significant.

Solution to (e)

forvalues yr = 2008/2019{

gen t`yr' = treatment * (year == `yr')

}

cap ssc install coefplot

replace t2013 = 0

reg dins t2008-t2012 t2013 t2014-t2019 i.year i.stfips, cluster(stfips)

coefplot, omitted keep(t2*) vertical

graph export DD_1.png, replaceThe output:

. forvalues yr = 2008/2019{

2. gen t`yr' = treatment * (year == `yr')

3. }

. cap ssc install coefplot

. replace t2013 = 0

(22 real changes made)

. reg dins t2008-t2012 t2013 t2014-t2019 i.year i.stfips, cluster(stfips)

note: t2013 omitted because of collinearity

Linear regression Number of obs = 516

F(21, 42) = .

Prob > F = .

R-squared = 0.9374

Root MSE = .0242

(Std. Err. adjusted for 43 clusters in stfips)

---------------------------------------------------------------------------------

| Robust

dins | Coef. Std. Err. t P>|t| [95% Conf. Interval]

----------------+----------------------------------------------------------------

t2008 | -.0052854 .0090566 -0.58 0.563 -.0235622 .0129915

t2009 | -.0112973 .0089213 -1.27 0.212 -.0293013 .0067066

t2010 | -.002676 .0074388 -0.36 0.721 -.017688 .012336

t2011 | -.0014193 .0066217 -0.21 0.831 -.0147825 .0119439

t2012 | .0003397 .0077351 0.04 0.965 -.0152705 .0159498

t2013 | 0 (omitted)

t2014 | .0464469 .009578 4.85 0.000 .0271176 .0657761

t2015 | .0692062 .010832 6.39 0.000 .0473463 .091066

t2016 | .0747343 .0117466 6.36 0.000 .0510288 .0984399

t2017 | .0642144 .012695 5.06 0.000 .0385948 .0898339

t2018 | .0618816 .0146892 4.21 0.000 .0322376 .0915256

t2019 | .0646171 .0130541 4.95 0.000 .0382728 .0909614

|

year |

2009 | -.0110171 .0041383 -2.66 0.011 -.0193686 -.0026657

2010 | -.0200235 .0049124 -4.08 0.000 -.0299371 -.0101098

2011 | -.0184424 .0054814 -3.36 0.002 -.0295044 -.0073804

2012 | -.0126684 .0043538 -2.91 0.006 -.0214547 -.0038822

2013 | -.006946 .0064585 -1.08 0.288 -.0199798 .0060877

2014 | .0378995 .0042739 8.87 0.000 .0292745 .0465246

2015 | .0694425 .0081728 8.50 0.000 .0529492 .0859358

2016 | .0848653 .0089196 9.51 0.000 .0668648 .1028657

2017 | .0872879 .0101555 8.60 0.000 .0667932 .1077827

2018 | .0892268 .0118061 7.56 0.000 .0654011 .1130525

2019 | .0842069 .0117343 7.18 0.000 .0605261 .1078876

|

stfips |

alaska | -.103853 1.04e-15 -1.0e+14 0.000 -.103853 -.103853

arizona | -.0412094 .0067381 -6.12 0.000 -.0548075 -.0276113

arkansas | -.0117976 .0067381 -1.75 0.087 -.0253957 .0018005

california | -.0416807 .0067381 -6.19 0.000 -.0552788 -.0280825

colorado | -.0107549 .0067381 -1.60 0.118 -.024353 .0028433

connecticut | .0482399 .0067381 7.16 0.000 .0346418 .061838

florida | -.0857497 1.04e-15 -8.3e+13 0.000 -.0857497 -.0857497

georgia | -.090137 1.04e-15 -8.7e+13 0.000 -.090137 -.090137

hawaii | .1102658 .0067381 16.36 0.000 .0966677 .1238639

idaho | -.0128005 1.04e-15 -1.2e+13 0.000 -.0128005 -.0128005

illinois | -.0163106 .0067381 -2.42 0.020 -.0299087 -.0027125

iowa | .0876154 .0067381 13.00 0.000 .0740173 .1012135

kansas | .0138945 1.04e-15 1.3e+13 0.000 .0138945 .0138945

kentucky | .0309765 .0067381 4.60 0.000 .0173784 .0445747

louisiana | -.0358099 1.04e-15 -3.5e+13 0.000 -.0358099 -.0358099

maine | .0656128 1.04e-15 6.3e+13 0.000 .0656128 .0656128

maryland | .0118266 .0067381 1.76 0.087 -.0017715 .0254247

michigan | .0349109 .0067381 5.18 0.000 .0213128 .048509

minnesota | .0884664 .0067381 13.13 0.000 .0748682 .1020645

mississippi | -.0424017 1.04e-15 -4.1e+13 0.000 -.0424017 -.0424017

missouri | .0185215 1.04e-15 1.8e+13 0.000 .0185215 .0185215

montana | .0016449 1.04e-15 1.6e+12 0.000 .0016449 .0016449

nebraska | .0465129 1.04e-15 4.5e+13 0.000 .0465129 .0465129

nevada | -.0688877 .0067381 -10.22 0.000 -.0824858 -.0552896

new jersey | -.0539224 .0067381 -8.00 0.000 -.0675205 -.0403243

new mexico | -.035146 .0067381 -5.22 0.000 -.0487441 -.0215479

north carolina | -.0214531 1.04e-15 -2.1e+13 0.000 -.0214531 -.0214531

north dakota | .0414656 .0067381 6.15 0.000 .0278675 .0550637

ohio | .0163148 .0067381 2.42 0.020 .0027167 .0299129

oklahoma | -.0662598 1.04e-15 -6.4e+13 0.000 -.0662598 -.0662598

oregon | -.0007891 .0067381 -0.12 0.907 -.0143872 .012809

rhode island | .0601783 .0067381 8.93 0.000 .0465801 .0737764

south carolina | -.0346476 1.04e-15 -3.3e+13 0.000 -.0346476 -.0346476

south dakota | .0173781 1.04e-15 1.7e+13 0.000 .0173781 .0173781

tennessee | -.0172016 1.04e-15 -1.7e+13 0.000 -.0172016 -.0172016

texas | -.1207823 1.04e-15 -1.2e+14 0.000 -.1207823 -.1207823

utah | -.0098695 1.04e-15 -9.5e+12 0.000 -.0098695 -.0098695

virginia | .0046849 1.04e-15 4.5e+12 0.000 .0046849 .0046849

washington | .0179123 .0067381 2.66 0.011 .0043142 .0315104

west virginia | .0310248 .0067381 4.60 0.000 .0174267 .044623

wisconsin | .0494254 .0067381 7.34 0.000 .0358273 .0630235

wyoming | -.0281642 1.04e-15 -2.7e+13 0.000 -.0281642 -.0281642

|

_cons | .6535443 .0051142 127.79 0.000 .6432234 .6638652

---------------------------------------------------------------------------------

. coefplot, omitted keep(t2*) vertical

. graph export DD_1.png, replace

(note: file DD_1.png not found)

(file DD_1.png written in PNG format)Solution to (f)

test t2008 t2009 t2010 t2011 t2012The output:

. test t2008 t2009 t2010 t2011 t2012

( 1) t2008 = 0

( 2) t2009 = 0

( 3) t2010 = 0

( 4) t2011 = 0

( 5) t2012 = 0

F( 5, 42) = 0.76

Prob > F = 0.5856I get a p-value of $.58$, which means we can’t reject the null hypothesis that treated and control states had parallel trends in 2008-2013. This increases my confidence in parallel trends holding in 2013-2019, though of course it is not a direct test of this.

Solution to (g)

Homework3.do

* Part (a)

use ehec_data.dta, clear

browse

tab year

* Part (b)

tab yexp2 if year == 2009, m

* Part (c)

gen treatment = .

replace treatment = 1 if yexp2 == 2014

replace treatment = 0 if yexp2 >= 2016

drop if treatment == .

tab treatment if year==2008, m

* Part (d)

gen y2014 = (year == 2014)

gen t_y2014 = y2014 * treatment

reg dins treatment y2014 t_y2014 if year == 2013 | year == 2014, cluster(stfips)

* Part (e)

forvalues yr = 2008/2019{

gen t`yr' = treatment * (year == `yr')

}

cap ssc install coefplot

replace t2013 = 0

reg dins t2008-t2012 t2013 t2014-t2019 i.year i.stfips, cluster(stfips)

coefplot, omitted keep(t2*) vertical

graph export DD_1.png, replace

* Part (f)

test t2008 t2009 t2010 t2011 t2012Lab 1

Basic STATA

Use the data gdbcn.csv: GDP of China in 1992-2003, performing the following operations using STATA.

Please write the corresponding STATA query statements for the following requirements based on the file mentioned.

1. Import the data

cd Lab1

import delimited using gdbcn.csv, encoding(GB2312). cd Lab1

Lab1

. import delimited using gdbcn.csv, encoding(GB2312)

(3 vars, 380 obs)2. How many observations are there?

count. count

380There are 380 observations.

3. How many variables are there, and what are their names?

describe. describe

Contains data

obs: 380

vars: 3

size: 5,700

--------------------------------------------------------------------------------------------

storage display value

variable name type format label variable label

--------------------------------------------------------------------------------------------

Thrhold_enddt str10 %10s Thrhold_EndDt

GDP_P_C_GDP~u float %9.0g GDP_P_C_GDP_Pric_Cumu

v3 byte %8.0g

--------------------------------------------------------------------------------------------

Sorted by:

Note: Dataset has changed since last saved.There are 2 variables, one named Thrhold_enddt, the other is GDP_P_C~u. v3 is a dummy variable, that is due to the bad format of the csv file.

4. What does the second variable mean? (Determine through its label).

GDP_Price_Cumulative

5. What is the mean of the Gross Domestic Product (GDP)?

summarize GDP_Pric_Cumu. summarize GDP_P_C

Variable | Obs Mean Std. Dev. Min Max

-------------+---------------------------------------------------------

GDP_P_C_GD~u | 126 247994.2 271458.1 5262.8 1210207247994.2

6. Output the number of missing values for each variable.

misstable summarize. misstable summarize

Obs<.

+------------------------------

| | Unique

Variable | Obs=. Obs>. Obs<. | values Min Max

-------------+--------------------------------+------------------------------

GDP_P_C_GD~u | 254 126 | 126 5262.8 1210207

v3 | 380 0 | 0 . .

-----------------------------------------------------------------------------254 null values on the variable: GDP_P_C_GDP~u

Regressive Analysis

Using the data from HPRICE1, estimate the following model:$$\text{price} = \beta_0 + \beta_1 \cdot \text{sqrft} + \beta_2 \cdot \text{bdrms} + \mu$$

where price represents the housing price in thousands of dollars.

1. Write the result in equation form.

cd Lab1

use hprice1.dta, clear

describe

reg price sqrft bdrms. cd Lab1

Lab1

. use hprice1.dta, clear

. describe

Contains data from Lab1/hprice1.dta

obs: 88

vars: 10 17 Mar 2002 12:21

size: 2,816

-------------------------------------------------------------------------------

storage display value

variable name type format label variable label

-------------------------------------------------------------------------------

price float %9.0g house price, $1000s

assess float %9.0g assessed value, $1000s

bdrms byte %9.0g number of bdrms

lotsize float %9.0g size of lot in square feet

sqrft int %9.0g size of house in square feet

colonial byte %9.0g =1 if home is colonial style

lprice float %9.0g log(price)

lassess float %9.0g log(assess

llotsize float %9.0g log(lotsize)

lsqrft float %9.0g log(sqrft)

-------------------------------------------------------------------------------

Sorted by:

. reg price sqrft bdrms

Source | SS df MS Number of obs = 88

-------------+---------------------------------- F(2, 85) = 72.96

Model | 580009.152 2 290004.576 Prob > F = 0.0000

Residual | 337845.354 85 3974.65122 R-squared = 0.6319

-------------+---------------------------------- Adj R-squared = 0.6233

Total | 917854.506 87 10550.0518 Root MSE = 63.045

------------------------------------------------------------------------------

price | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

sqrft | .1284362 .0138245 9.29 0.000 .1009495 .1559229

bdrms | 15.19819 9.483517 1.60 0.113 -3.657582 34.05396

_cons | -19.315 31.04662 -0.62 0.536 -81.04399 42.414

------------------------------------------------------------------------------We thus give the model as $$\text{price} = -19.315 + 0.12844 \cdot \text{sqrft} + 15.198 \cdot \text{bdrms} + \mu$$

2. Estimate the increase in price when a bedroom is added without changing the area.

The coefficient for bdrms is $\beta_2 = 15.198$.

This means that adding one bedroom, while keeping square footage constant, is estimated to increase the price by $15,198.

3. Estimate the effect of adding a bedroom that is 140 square feet in size. Compare this result with the one obtained in part (2).

The total impact of adding a bedroom with 140 square feet is the sum of the effects of the additional square footage and the additional bedroom:$\Delta \text{price} = \beta_1 \cdot 140 + \beta_2$.

Substituting the coefficients:$\Delta \text{price} = 0.12844 \cdot 140 + 15.198 = 17.9816 + 15.198 = 33.1796$.

Thus, the price is estimated to increase by $33,180 when a bedroom with 140 square feet is added.

Comparison with Part 2:

The price increase from adding a bedroom with 140 square feet is higher than adding a bedroom alone because the additional square footage also adds value.

4. Determine the proportion of price variation explained by square footage and the number of bedrooms.

The $R^2$ value from the regression output is 0.6319.

This indicates that 63.19% of the variation in housing prices can be explained by the square footage ($\text{sqrft}$) and the number of bedrooms ($\text{bdrms}$) in the model.

5. Predict the sales price of the first house in the sample.

gen predicted_price = _b[_cons] + _b[sqrft]*sqrft + _b[bdrms]*bdrms

list predicted_price if _n==1. gen predicted_price = _b[_cons] + _b[sqrft]*sqrft + _b[bdrms]*bdrms

. list predicted_price if _n==1

+-----------------+

| predicted_price |

|-----------------|

1. | 354.6053 |

+-----------------+The predicted price is $354,605.

6. Given the actual price of $300,000 on the first house, compute the residual. Assess whether the buyer paid more or less based on the sign of the residual.

gen residual = price - predicted_price

list residual if _n == 1. gen residual = price - predicted_price

. list residual if _n == 1

+-----------+

| residual |

|-----------|

1. | -54.60526 |

+-----------+The residual is -$54,605, indicating the buyer paid less.

Lab 2

Data Visualization

Experiment Requirements:

- Complete the drawing of the two figures above (60%)

- Optimize the figures (e.g., titles, labels, coordinates, etc., you do not have to draw this exactly the same as the figures given) (30%)

- Analyze the visualization results (10%)

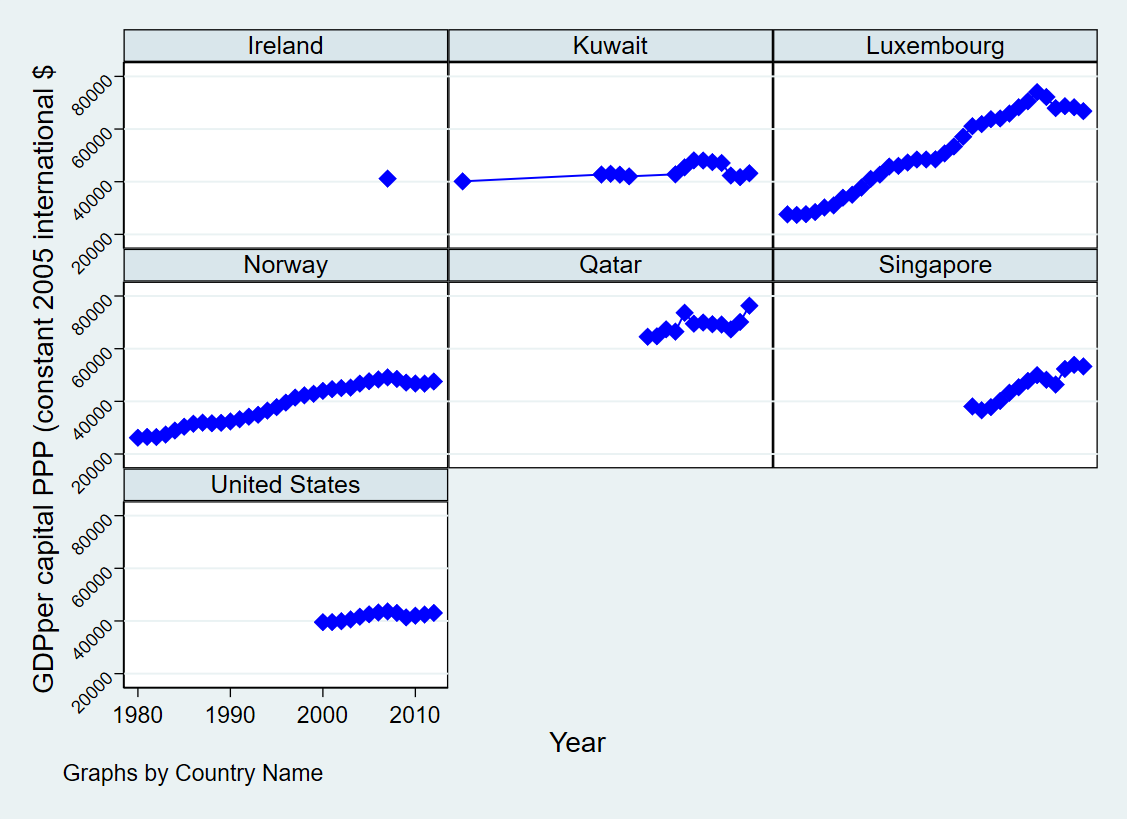

The first figure

The first figure

cd Lab2

use wdipol.dta, clear

describe

keep if inlist(country, "Ireland","Kuwait","Luxembourg","Norway","Qatar","Singapore","United States")

egen max_gdppc = max(gdppc) if country=="Ireland"

drop if country=="Ireland" & gdppc<max_gdppc

drop if (country=="Singapore" | country=="United States") & year<2000

sort country year

preserve

keep if country=="Kuwait"

sort year

scalar kuwait_first = gdppc[1]

restore

sort country year

replace gdppc = . if country=="Kuwait" & gdppc < kuwait_first & _n > 1

graph twoway (connected gdppc year, msymbol(diamond) mcolor(blue) lcolor(blue)), by(country, cols(3) compact note("Graphs by Country Name")) ytitle("GDPper capital PPP (constant 2005 international $") xtitle("Year") legend(off) yscale(range(40000 .)). cd Lab2

Lab2

. use wdipol.dta, clear

. describe

Contains data from wdipol.dta

obs: 4,542

vars: 12 25 Feb 2015 17:31

size: 381,528

--------------------------------------------------------------------------------------------------

storage display value

variable name type format label variable label

--------------------------------------------------------------------------------------------------

year int %10.0g Year

country str24 %24s Country Name

gdppc double %10.0g GDP per capita, PPP (constant 2005 international $)

unempf double %10.0g Unemployment, female (% of female labor force)

unempm double %10.0g Unemployment, male (% of male labor force)

unemp double %10.0g Unemployment, total (% of total labor force)

export double %10.0g Exports of goods and services (constant 2005 US$)

import double %10.0g Imports of goods and services (constant 2005 US$)

polity byte %8.0g polity (original)

polity2 byte %8.0g polity2 (adjusted)

trade float %9.0g Imports + Exports

id float %9.0g group(country)

-------------------------------------------------------------------------------------------------

Sorted by:

. keep if inlist(country, "Ireland","Kuwait","Luxembourg","Norway","Qatar","Singapore","United States")

(4,358 observations deleted)

. egen max_gdppc = max(gdppc) if country=="Ireland"

(171 missing values generated)

. drop if country=="Ireland" & gdppc<max_gdppc

(12 observations deleted)

. drop if (country=="Singapore" | country=="United States") & year<2000

(40 observations deleted)

. sort country year

. preserve

. keep if country=="Kuwait"

(105 observations deleted)

. sort year

. scalar kuwait_first = gdppc[1]

. restore

. sort country year

. replace gdppc = . if country=="Kuwait" & gdppc < kuwait_first & _n > 1

(13 real changes made, 13 to missing)

. graph twoway (connected gdppc year, msymbol(diamond) mcolor(blue) lcolor(blue)), by(country, cols(3) compact note("Graphs by

> Country Name")) ytitle("GDPper capital PPP (constant 2005 international $") xtitle("Year") legend(off) yscale(range(40000 .

> ))Then you can use the graph editor to modify the layout.

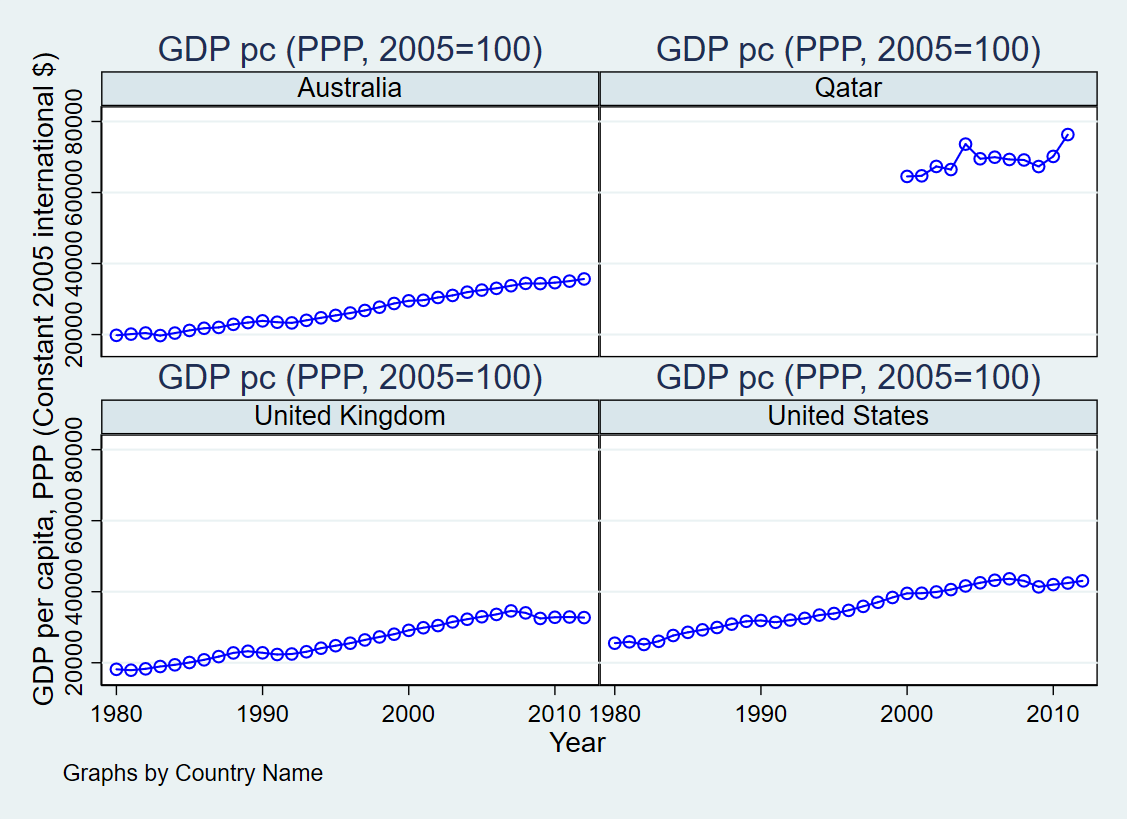

The second figure

The second figure

cd Lab2

use wdipol.dta, clearkeep if inlist(country, "Australia", "Qatar", "United Kingdom", "United States")

sort country year

graph twoway (connected gdppc year, msymbol(o) mcolor(blue) lcolor(blue)), by(country, rows(2) compact note("Graphs by Country Name")) title("GDP pc (PPP, 2005=100)") ytitle("GDP per capita, PPP (Constant 2005 international $)") xtitle("Year") legend(off). cd Lab2

Lab2

. use wdipol.dta, clear

. keep if inlist(country, "Australia", "Qatar", "United Kingdom", "United States")

(4,431 observations deleted)

. sort country year

. graph twoway (connected gdppc year, msymbol(o) mcolor(blue) lcolor(blue)), by(country, rows(2) compact note("Graphs by Count

> ry Name")) title("GDP pc (PPP, 2005=100)") ytitle("GDP per capita, PPP (Constant 2005 international $)") xtitle("Year") lege

> nd(off)Then you can use the graph editor to modify the layout.

Data Visualization in Econometrics

SLEEP75

Using the SLEEP75 data from Biddle and Hamermesh (1990), examine whether there is a trade-off between the time spent sleeping each week and the time spent on paid work. We can use either of these variables as the dependent variable.

1. Estimate the model: $$\text{sleep} = \beta_0 + \beta_1 \text{totwrk} + \mu$$

Where $\text{sleep}$ represents the number of minutes spent sleeping at night each week, and $\text{totwrk}$ represents the number of minutes spent on paid work during the same week. Report your results in equation form, along with the number of observations and $R^2$. What does the intercept in this equation represent?

cd Lab2

use SLEEP75.dta

describe sleep totwrk

reg sleep totwrk. cd Lab2

Lab2

. use SLEEP75.dta

. describe sleep totwrk

storage display value

variable name type format label variable label

------------------------------------------------------------------------------------------------------------------------------

sleep int %9.0g mins sleep at night, per wk

totwrk int %9.0g mins worked per week

. reg sleep totwrk

Source | SS df MS Number of obs = 706

-------------+---------------------------------- F(1, 704) = 81.09

Model | 14381717.2 1 14381717.2 Prob > F = 0.0000

Residual | 124858119 704 177355.282 R-squared = 0.1033

-------------+---------------------------------- Adj R-squared = 0.1020

Total | 139239836 705 197503.313 Root MSE = 421.14

------------------------------------------------------------------------------

sleep | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

totwrk | -.1507458 .0167403 -9.00 0.000 -.1836126 -.117879

_cons | 3586.377 38.91243 92.17 0.000 3509.979 3662.775

------------------------------------------------------------------------------From the results above, we have the model: $$\text{sleep} = 3586.377 – 0.1507458 \cdot \text{totwrk} + \mu$$

The intercept $\beta_0$ represents the expected number of minutes of nightly sleep per week when $\text{totwrk}= 0$. In other words, it reflects the predicted total weekly nighttime sleep in the absence of paid work.

2. If $\text{totwrk}$ increases by 2 hours, by how much is $\text{sleep}$ estimated to decrease? Do you think this is a significant effect?

If $\text{totwrk}$ increases by 2 hours, or 120 minutes, the estimated decrease in $\text{sleep}$ is calculated as:$\Delta \text{sleep} = \beta_1 \times 120 = -0.1507458 \times 120 \approx -18 \text{ minutes}$.

An additional 2 hours of work per week results in only an 18-minute reduction in sleep, which is not particularly significant. From a weekly perspective, this is seemingly a relatively small impact.

WAGE2

Using data from WAGE2, estimate a simple regression to explain monthly wages using intelligence quotient.

1. Calculate the average (here, you can use mean value to represent the average value) wage and the average IQ in the sample. What is the sample standard deviation of IQ? (In the population, IQ is standardized with a mean of 100 and a standard deviation of 15.)

use WAGE2.dta, clear

summarize wage IQ. use WAGE2.dta, clear

. summarize wage IQ

Variable | Obs Mean Std. Dev. Min Max

-------------+---------------------------------------------------------

wage | 935 957.9455 404.3608 115 3078

IQ | 935 101.2824 15.05264 50 145Mean wage : 957.9455

Mean IQ : 101.2824

Standard deviation of IQ : 15.05264

2. Estimate a simple regression model where an increase of one unit in IQ results in a specific change in wage. Using this model, calculate the expected change in wages when IQ increases by 15 units. Does IQ explain most of the variation in wages?

Here, we use Linear Model.

reg wage IQ. reg wage IQ

Source | SS df MS Number of obs = 935

-------------+---------------------------------- F(1, 933) = 98.55

Model | 14589782.6 1 14589782.6 Prob > F = 0.0000

Residual | 138126386 933 148045.429 R-squared = 0.0955

-------------+---------------------------------- Adj R-squared = 0.0946

Total | 152716168 934 163507.675 Root MSE = 384.77